![featured-evorail]()

One of the big announcements of VMworld 2014 is the introduction of VMware's EVO product line, including its first offering, EVO:RAIL. After the announcement, several sessions focussing on this new product popped up in the agenda:

- SDDC1337 - Technical Deep Dive on EVO:RAIL, the new VMware Hyper-Converged Infrastructure Appliance

- SDDC1767 - SDDC at Scale with VMware Hyper-Converged Infrastructure: Deeper Dive

- SDDC1818 - VMware Customers discuss the new VMware EVO:RAIL and how Hyper-Converged Infrastructure is impacting how they manage and deploy Software Defined Infrastructure

- SDDC2095 - Overview of EVO:RAIL: The Radically New Hyper-Converged Infrastructure Appliance 100% Powered by VMware

- SDDC3245-S - Software-Defined Data Center through Hyper-Converged Infrastructure

- SPL-SDC-1428 - VMware EVO:RAIL Introduction (Hands-on Lab)

If you have access to vmworld.com, these sessions are expected to be published in about two weeks. If you're at VMworld now, you know where to go!

This article summarises what I've learned from the EVO:RAIL sessions I've attended at VMworld. So read on if you want to know more about hyper-converged in general and VMware's EVO:RAIL offering.

What is hyper-converged and how does it relate to EVO:RAIL?

Let's first take a look at what EVO:RAIL actually is. EVO:RAIL is VMware's hyper-converged solution. Hyper-converged refers to bringing together compute, storage and network in one box.

Let's compare three options when you're building an infrastructure and see how hyper-converged is different:

- Build-your-own: A custom-built solution based on the best brands available in the market, selected by you as a customer. For example, you choose to run HP servers with NetApp storage and Cisco switching.

- Converged infrastructure: A converged infrastructure is a pre-defined combination of hardware, mostly available as one SKU through a single vendor and/or single point of contact. The vendor offers a validated design, which guarantees correct functioning of the solution. With a converged infrastructure you're using standard components (servers, network, storage/SAN). Popular examples are Cisco/NetApp FlexPod/ExpressPod, Vblock by Cisco/EMC/VMware and UCP by Hitachi.

- Hyper-converged is the new kid on the block. With hyper-converged you put compute, storage and, to some extent, network in one box. For the storage part you're leveraging what is called "server-SAN". A server-SAN uses the local disks in your servers to provide shared storage. Examples of hyper-converged solutions are Nutanix, Simplivity and VMware's EVO:RAIL.

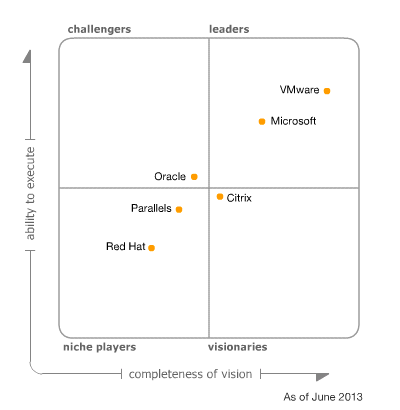

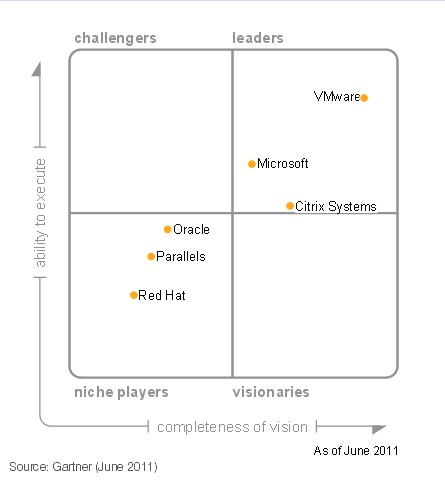

To get an idea of the (hyper-)converged market take a look at Gartner's Magic Quadrant for converged infrastructures:

![mq-converged]()

Unfortunately Gartner treats converged and hyper-converged as one category in this MQ, but at least it gives you an idea of the players in the market. Notice that EVO:RAIL is not in the MQ, because this MQ dates from June 2014.

Regarding hyper-converged, it's important to notice that these solutions were initially a pre-defined combination of hardware and software. This is now changing, with Nutanix offering its software as a separate solution. VMware's EVO:RAIL is also software-only; VMware is working together with hardware partners to deliver a complete solution. Simplivity is using rebranded Dell hardware, but will also be available on Cisco hardware in the future.

Advantages of converged are the ease of procurement (everything available through a single SKU/single point of contact), reduced complexity and the predictability of the infrastructure. With hyper-converged you use even more standardised (small) building blocks, which are easy to manage, follow a scale-out approach and offer a faster time to market.

Disadvantages of (hyper-)converged are the lack of customisation, specifically if your infrastructure requires some special configuration settings, and the rigid configuration: the building blocks have to fit the load you're planning to run on the (hyper-)converged infrastructure. You might also face vendor lock-in. Read this article for more information: To Converge Infrastructure or Not - That's the question.

VMware EVO:RAIL Hardware Specifications

![evorail]()

This takes me to the next point: what hardware is currently available and what are the hardware requirements for EVO:RAIL?

Currently EVO:RAIL is available on Dell, EMC, Fujitsu, Inspur, Supermicro and Net One hardware. Big names missing here are of course Cisco, HP and IBM. What these guys are up to I don't know, although Cisco announced a partnership with Simplivity, which is an alternative hyper-converged solution. As you can imagine, there might be some conflict of interest between VMware's new hyper-converged solution and proprietary solutions of these hardware partners (not yet available, but possibly in development). On the other hand, Cisco, HP and IBM (and other vendors) might step into EVO:RAIL in a second phase. It also took HP some additional time to have a VSAN Ready Node available.

Typical minimum hardware specs for EVO:RAIL are currently:

- Two Intel E5-2620 CPUs with six cores each;

- 192 GB of RAM;

- 3x 1.2 TB HDDs and 1x 400 GB SSD;

- 2x 10 Gbit NICs;

- 1x 1 Gbit NIC for management;

Notice these are minimum requirements; depending on the exact customer requirements, the server configuration can change. Another important point is that you will need 10 Gbit top-of-rack switches. These switches have to support multicast, including IGMP snooping and an IGMP querier, because of VSAN requirements. VSAN? Yes, VSAN is part of and used in the EVO:RAIL solution.

One EVO:RAIL building block consists of 4 servers contained in 2U of rackspace. You can connect 4 EVO:RAIL building blocks, resulting in a maximum of 16 physical servers in one cluster. EVO:RAIL is available through the hardware vendor as a single SKU: you buy both the hardware and the VMware software.
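To put the minimum specs and the 4-block limit in perspective, here's a quick back-of-the-envelope calculation in Python. It simply multiplies the minimum per-server specs listed above by 4 servers per block; the names (`cluster_totals`, `ClusterTotals`) are just for illustration, actual configurations vary per hardware vendor, and the HDD figure is raw capacity before any VSAN overhead or storage policy.

```python
from dataclasses import dataclass

# Minimum per-server specs as listed above (vendor configurations may differ).
CORES_PER_SERVER = 2 * 6       # two six-core Intel E5-2620 CPUs
RAM_GB_PER_SERVER = 192
HDD_TB_PER_SERVER = 3 * 1.2    # 3x 1.2 TB HDD (VSAN capacity tier)
SSD_GB_PER_SERVER = 400        # 1x 400 GB SSD (VSAN flash tier)

SERVERS_PER_BLOCK = 4
MAX_BLOCKS = 4                 # up to 4 blocks -> 16 hosts in one cluster


@dataclass
class ClusterTotals:
    hosts: int
    cores: int
    ram_gb: int
    raw_hdd_tb: float
    ssd_gb: int


def cluster_totals(blocks: int) -> ClusterTotals:
    """Raw totals for a cluster built from `blocks` EVO:RAIL building blocks."""
    if not 1 <= blocks <= MAX_BLOCKS:
        raise ValueError(f"blocks must be between 1 and {MAX_BLOCKS}")
    hosts = blocks * SERVERS_PER_BLOCK
    return ClusterTotals(
        hosts=hosts,
        cores=hosts * CORES_PER_SERVER,
        ram_gb=hosts * RAM_GB_PER_SERVER,
        # Round to hide floating-point noise (3 * 1.2 is not exactly 3.6).
        raw_hdd_tb=round(hosts * HDD_TB_PER_SERVER, 1),
        ssd_gb=hosts * SSD_GB_PER_SERVER,
    )


for n in range(1, MAX_BLOCKS + 1):
    print(n, cluster_totals(n))
```

With one block that gives 48 cores, 768 GB of RAM and 14.4 TB of raw HDD capacity; a full 16-host cluster quadruples each figure.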

Let's now zoom in on the software included in EVO:RAIL.

EVO:RAIL Software Components

On top of the hardware EVO:RAIL uses standard VMware software, consisting of:

- VMware vSphere 5.5 including the vCenter Server Appliance;

- VMware VSAN for storage;

- VMware LogInsight;

- The VMware Hyper-Converged Infrastructure Appliance;

Notice that NSX is not part of EVO:RAIL; there has been some confusion about this. EVO:RAIL actually uses standard vSwitches! That's right, it doesn't even use VMware's vDistributed Switch (vDS), because of availability concerns regarding the vCenter Server Appliance (VCSA). The VCSA is required for managing a vDS, but it also runs on the EVO:RAIL building block itself. This might lead to a "chicken and egg" problem in case the VCSA is not available because of a (network) problem.

The EVO:RAIL Hyper-Converged Infrastructure Appliance

The magic of EVO:RAIL happens in the Hyper-Converged Infrastructure Appliance (HCIA). The HCIA is responsible for the initial deployment, management and monitoring of your EVO:RAIL building block, including the virtual machines running on it. The HCIA puts an extra, simplified management layer on top of the vSphere infrastructure running underneath and presents a lean HTML5-based management interface to the user.

![evorail01]()

After putting your EVO:RAIL block in the rack and starting the HCIA, you are presented with a wizard to configure the solution. The HCIA is responsible for configuring your ESXi hosts, vCenter Server and LogInsight, and uses VMware and standard open source components for these tasks. The HCIA requires IPv6 and mDNS to discover its peers.
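To illustrate what peer discovery via "IPv6 and mDNS" means on the wire, here's a minimal Python sketch that builds a standard multicast DNS PTR query (RFC 6762) and can send it to the IPv6 link-local mDNS group ff02::fb on port 5353. This is not how the HCIA is implemented: the service name `_evorail._tcp.local` and the helper names are hypothetical, chosen only for the example.

```python
import socket
import struct

MDNS_ADDR_V6 = "ff02::fb"   # mDNS IPv6 link-local multicast group (RFC 6762)
MDNS_PORT = 5353
QTYPE_PTR = 12
QCLASS_IN = 1


def encode_name(name: str) -> bytes:
    """Encode a DNS name as length-prefixed labels terminated by a zero byte."""
    out = b""
    for label in name.rstrip(".").split("."):
        raw = label.encode("ascii")
        if not 0 < len(raw) < 64:
            raise ValueError(f"invalid label: {label!r}")
        out += bytes([len(raw)]) + raw
    return out + b"\x00"


def build_mdns_query(service: str) -> bytes:
    """Build a one-question mDNS PTR query; multicast queries use ID 0."""
    # Header fields: ID, flags, QDCOUNT, ANCOUNT, NSCOUNT, ARCOUNT
    header = struct.pack("!HHHHHH", 0, 0, 1, 0, 0, 0)
    question = encode_name(service) + struct.pack("!HH", QTYPE_PTR, QCLASS_IN)
    return header + question


def send_query(packet: bytes, interface: str) -> None:
    """Send the query to ff02::fb on one interface (needs an IPv6-enabled NIC)."""
    ifindex = socket.if_nametoindex(interface)
    with socket.socket(socket.AF_INET6, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.IPPROTO_IPV6, socket.IPV6_MULTICAST_IF, ifindex)
        # Link-local multicast needs the scope id (interface index) in the address.
        sock.sendto(packet, (MDNS_ADDR_V6, MDNS_PORT, 0, ifindex))


if __name__ == "__main__":
    # Hypothetical service type; not the name EVO:RAIL actually advertises.
    pkt = build_mdns_query("_evorail._tcp.local")
    print(pkt.hex())
```

Because ff02::fb is a link-local group, such queries are not routed, so peers discovered this way have to share the same layer-2 segment.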

At the end of the deployment EVO:RAIL will do a comprehensive check of the final configuration. If any problem occurs, EVO:RAIL will allow you to fix your configuration.

The initial configuration of EVO:RAIL only takes around 15 minutes.

Each EVO:RAIL building block, consisting of 4 servers, requires an HCIA. A maximum of 4 HCIAs can be tied together, resulting in an EVO:RAIL configuration of 16 ESXi hosts running in one VMware cluster. You need one vCenter Server Appliance for this configuration.

![evorail07]()

After you've deployed the configuration, it's time to deploy some virtual machines. This can also be achieved through the interface of the HCIA.

EVO:RAIL offers three VM sizes: small, medium and large. The specific configuration of each VM size can be adjusted according to your own requirements.

According to some tests conducted by VMware, you can expect to run 250 Horizon View desktops (2 vCPUs, 2 GB RAM, 30 GB disk) or about 100 server VMs (2 vCPUs, 6 GB RAM, 60 GB disk) on a 4-node EVO:RAIL cluster.
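These test figures are easier to interpret next to the hardware they ran on. The back-of-the-envelope Python sketch below totals the provisioned vCPU, RAM and disk for both workloads and compares them with one 4-node block built to the minimum specs (48 cores, 768 GB RAM). It ignores hypervisor overhead, memory sharing, thin provisioning and VSAN replicas, and uses decimal TB, so treat the ratios as rough indicators rather than sizing guidance; the function name is illustrative.

```python
# Cluster figures assume one 4-node block at the minimum specs.
CLUSTER_CORES = 4 * 12     # 4 servers x two six-core CPUs
CLUSTER_RAM_GB = 4 * 192

WORKLOADS = {
    # name: (vm_count, vcpus_per_vm, ram_gb_per_vm, disk_gb_per_vm)
    "horizon_view_desktops": (250, 2, 2, 30),
    "server_vms": (100, 2, 6, 60),
}


def footprint(count, vcpus, ram_gb, disk_gb):
    """Aggregate provisioned resources and simple ratios for one workload."""
    total_vcpus = count * vcpus
    total_ram = count * ram_gb
    return {
        "vcpus": total_vcpus,
        "vcpu_per_core": round(total_vcpus / CLUSTER_CORES, 1),
        "ram_gb": total_ram,
        "ram_share": round(total_ram / CLUSTER_RAM_GB, 2),  # fraction of physical RAM
        "disk_tb": count * disk_gb / 1000,                  # provisioned, decimal TB
    }


for name, spec in WORKLOADS.items():
    print(name, footprint(*spec))
```

For the 250 desktops this works out to roughly 10 vCPUs per physical core and about 65% of the physical RAM; the 100 server VMs need fewer vCPUs (about 4 per core) but more RAM (600 of 768 GB).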

![evorail10]()

The HCIA offers some dashboards for monitoring. These dashboards are specific to EVO:RAIL and don't use vCenter Operations Manager/vRealize Operations. They offer some information on how your cluster is performing and focus on the counters specific to EVO:RAIL.

If necessary, you can still use the vSphere (Web) Client for managing your infrastructure. Because the HCIA doesn't save any information locally (and uses just standard VMware APIs), changes made using one of the vSphere clients will show up in the HCIA interface as well.

Another interesting new feature is that EVO:RAIL offers seamless upgrades for the environment. It doesn't use VMware Update Manager for this; the upgrade feature is part of the HCIA, which is pretty cool I think.

Q&A

At the end of session SDDC1337 (presented by Dave Shanley and Duncan Epping), which I attended, there was some time for the attendees to ask questions. For your reference I've included these questions and the corresponding answers in this article.

Q: Is the Cisco Nexus 1000V supported as a virtual switch for EVO:RAIL?

A: No, currently only standard vSwitches are supported; even VMware's own vDistributed Switch is not supported [and I think there are no plans to support it in the future, see my comment on this above]. Support for the Nexus 1000V might come in a future version.

Q: How to deal with hardware/firmware upgrades, is this included in EVO:RAIL?

A: Hardware/firmware management is currently not part of EVO:RAIL and/or the HCIA.

Q: Can you mix hardware vendors in an EVO:RAIL environment/cluster?

A: Although this might be possible, it is certainly not advised. The question is: why do you want this? In a normal environment it's not common practice to mix servers of different hardware vendors in one cluster.

Q: What is the usable capacity of the VSAN datastore taking into account the minimum hardware requirements [see above]?

A: This depends on the exact configuration, but expect somewhere between 12.1 and 13.3 TB. [Notice: the eventual VSAN capacity also depends on the configured policies regarding your availability requirements]
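The bracketed note matters in practice. A minimal Python sketch, assuming VSAN's mirroring (RAID-1) behaviour for the "number of failures to tolerate" (FTT) policy: tolerating n host failures stores n + 1 copies of each object and needs at least 2n + 1 hosts. With FTT=1, roughly half of the quoted usable capacity is left for VM data. The function name is illustrative, and witness components, free-space headroom and thin provisioning are ignored.

```python
def effective_capacity_tb(usable_tb: float, ftt: int, hosts: int) -> float:
    """Capacity left for VM data when every object is mirrored ftt + 1 times.

    Assumes VSAN RAID-1 mirroring: tolerating `ftt` host failures stores
    ftt + 1 copies of each object and needs at least 2 * ftt + 1 hosts.
    Witness components, slack space and thin provisioning are ignored.
    """
    if ftt < 0:
        raise ValueError("ftt must be >= 0")
    if hosts < 2 * ftt + 1:
        raise ValueError(f"{hosts} hosts cannot satisfy FTT={ftt}")
    return usable_tb / (ftt + 1)


# Usable range quoted in the Q&A for a 4-node block with minimum specs.
for usable in (12.1, 13.3):
    effective = effective_capacity_tb(usable, ftt=1, hosts=4)
    print(f"{usable} TB usable -> {effective:.2f} TB with FTT=1")
```

With a single 4-node block, FTT=1 is also the highest setting available under this rule, since FTT=2 would need at least five hosts.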

Q: EVO:RAIL minimum requirements were mentioned during the presentation [and are included in this article]; can we expect other hardware configuration options?

A: Yes, although exact offerings depend on the hardware vendor and may vary.

Q: Can you import existing virtual machines into EVO:RAIL?

A: Yes, you can; the imported VMs will show up in the EVO:RAIL/HCIA interface immediately.

Q: Is it possible to import/connect EVO:RAIL into an existing vCenter Server?

A: Yes, this is possible.

Some additional Q&A on VMware EVO:RAIL is in this article by René Bos. Marcel van den Berg's article on EVO:RAIL is also worth a read.

The post Live from VMworld 2014: A closer look at Hyper-Converged & VMware EVO:RAIL appeared first on viktorious.nl - Virtualization & Cloud Management.

Of course you already *love* the vSphere Web Client, don't you? Probably not... but wait, there's the new Web Client! It's very much improved: faster login, a faster right-click menu and faster performance charts. Usability has improved as well: the Recent Tasks pane has moved to the bottom and the right-click menus are flattened. The Virtual Machine Remote Console (VMRC) has also improved and looks more or less the same as the VMRC in the Windows vSphere Client.

With the new High Density Direct Attached Storage (HDDAS) option it's now possible to use VSAN effectively in blade environments. Both SSDs and HDDs are supported in an HDDAS configuration, as well as in combination with local flash devices in the (blade) servers.

My configuration is:

VXLAN, VTEPs? What exactly is VXLAN doing in an NSX implementation?

group on the underlying distributed virtual switch to a port on the distributed logical router. The DLR is connected to a VLAN Logical Interface (VLAN LIF) in that case.

At VMworld it's not only VMware that's announcing new stuff.
